Software Test Automation

Session 1: Tools That Can Help

Tools throughout the lifecycle: Where in the software development life cycle test activities take place and which are tool supportable.

Tool support for testing: The different types of tool support for testing activities throughout the software development lifecycle.

Benefits and pitfalls: The benefits and pitfalls that can be expected with the different types of test tool.

Session 2: How Much Help Can Tools Provide?

What help is required?: The reasons for introducing a test tool and whether this is the best solution to the problem.

Are tools the best solution?: Two different approaches to comparing different solutions against a set of problems.

Quantifying the return on investment: A simple approach to estimating the costs and benefits of testing with and without tool support.
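
As a rough illustration of this kind of estimate, the sketch below compares the cumulative cost of running test cycles manually and with tool support, and finds the break-even point. The cost model (a one-off set-up cost plus a fixed cost per cycle) and all names and figures are assumptions made for illustration; they are not taken from the course material.

```python
import math


def cumulative_cost(setup_cost, cost_per_cycle, cycles):
    """Total cost after a number of test cycles (hypothetical linear model)."""
    return setup_cost + cost_per_cycle * cycles


def break_even_cycles(manual_per_cycle, tool_setup, automated_per_cycle):
    """Smallest number of cycles at which tool support costs no more than manual testing.

    Returns None when automation never pays back under this model.
    """
    saving_per_cycle = manual_per_cycle - automated_per_cycle
    if saving_per_cycle <= 0:
        return None
    return math.ceil(tool_setup / saving_per_cycle)


# Invented figures, in person-hours: manual cycle 10, tool set-up 45, automated cycle 1.
cycles = break_even_cycles(manual_per_cycle=10, tool_setup=45, automated_per_cycle=1)
manual_total = cumulative_cost(0, 10, cycles)
automated_total = cumulative_cost(45, 1, cycles)
```

A real business case would also need to account for costs this model leaves out, such as script maintenance and training.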

Example business cases: A few simple business cases for different tool types.

Assessing readiness: How to assess the best time to introduce change.

Session 3: Introducing a Test Tool

Choosing the right tool: The need for a project to select a test tool and the steps involved.

Role of a pilot project: The test tool implementation process and the importance of a pilot project.

Developing best practices: A few objectives for the pilot project and the areas where early adoption of best practices is key to long-term success.

Lessons to learn: A few case histories where things went wrong and the lessons that can be learnt from them.

Session 4: Automating the Test Process

The test process: A detailed description of the steps taken when creating and performing tests and their suitability for automation.

Example application: A brief description of a simple application with an explanation of one simple test that could be performed on it.

Automating test execution: The problems incurred by using record / playback facilities alone.

Automating test verification: Some of the many and varied choices that can be made.

Automated but not Automatic: The problems involved in making the same automated test work under different situations and an introduction to possible solutions.

Session 5: Case History

Background: The reasons behind one organisation's decision to automate testing and the solutions they implemented.

Results: The effort put into automation and the savings achieved.

Lessons: Things learnt in this case history that need to be addressed by most other test automation projects.

Session 6: Scripting Techniques

Example application: A brief description of a simple application with an explanation of one simple test that could be performed on it.

Introduction to scripting: A few facts about scripting that help explain its importance in test execution automation.

Scripting techniques: Five approaches to scripting (linear, structured, shared, data-driven and keyword-driven). The pros and cons of each technique using examples to illustrate the points made.
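
To make the last two techniques concrete, here is a minimal sketch contrasting data-driven and keyword-driven scripting. The `ShoppingList` class stands in for an application under test, and the keyword names (`add`, `remove`, `check_count`) are hypothetical; none of them come from the course's own example application.

```python
class ShoppingList:
    """Hypothetical application under test."""

    def __init__(self):
        self.items = []

    def add(self, item):
        self.items.append(item)

    def remove(self, item):
        self.items.remove(item)


def run_data_driven(rows):
    """Data-driven: one fixed script, driven by rows of (items_to_add, expected_count)."""
    results = []
    for items, expected_count in rows:
        app = ShoppingList()
        for item in items:
            app.add(item)
        results.append(len(app.items) == expected_count)
    return results


def run_keyword_driven(table):
    """Keyword-driven: the table names the actions as well as supplying the data."""
    app = ShoppingList()
    actions = {"add": app.add, "remove": app.remove}
    for keyword, argument in table:
        if keyword == "check_count":
            if len(app.items) != int(argument):
                return False
        else:
            actions[keyword](argument)
    return True


data_results = run_data_driven([(["milk", "eggs"], 2), ([], 0)])
keyword_result = run_keyword_driven(
    [("add", "milk"), ("add", "eggs"), ("remove", "milk"), ("check_count", "1")]
)
```

In the data-driven form, new tests are added by writing new rows, but the sequence of actions is fixed in the script. In the keyword-driven form, the table also decides which actions run and in what order.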

Script pre-processing: An explanation of script pre-processing and the benefits that can be achieved.

Session 7: Automated Comparison

Automated test verification: Some simple guidelines that can help you avoid making costly mistakes.

Test sensitivity: The importance of achieving the right degree of verification and how it should be varied across different test cases.

Implementing post-execution comparison: Alternative approaches to automating post-execution comparison.

Simple versus complex comparisons: The benefits and pitfalls of using simple and complex comparisons and a few approaches to achieving them.
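
One way to picture the difference is the sketch below, where a simple comparison demands an exact match and a complex comparison first masks parts of the output that are expected to vary. The timestamp format and the masking approach are assumptions chosen for illustration, not a method prescribed by the course.

```python
import re

# Assumed timestamp format: YYYY-MM-DD HH:MM:SS
TIMESTAMP = re.compile(r"\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}")


def simple_compare(actual, expected):
    """Simple comparison: the outputs must be identical."""
    return actual == expected


def masked_compare(actual, expected, patterns=(TIMESTAMP,)):
    """Complex comparison: ignore text matching the given patterns, compare the rest."""

    def mask(text):
        for pattern in patterns:
            text = pattern.sub("<MASKED>", text)
        return text

    return mask(actual) == mask(expected)


expected_output = "Report generated 2024-01-01 10:00:00\nTotal: 42"
actual_output = "Report generated 2024-03-05 14:22:31\nTotal: 42"

exact_match = simple_compare(actual_output, expected_output)
masked_match = masked_compare(actual_output, expected_output)
```

Here the simple comparison reports a mismatch caused only by the run date, while the masked comparison still catches a genuine difference such as a changed total.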

Post-execution comparisons: A practical approach to implementing comparisons.

Session 8: Testware Architecture

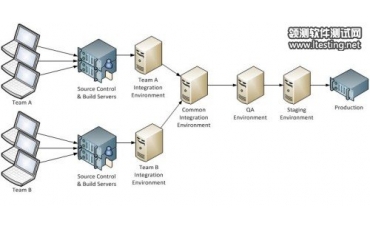

Introduction: How testware is organised and structured. Three key issues that have to be addressed in order to avoid serious problems. An approach to organising testware that addresses each of these three issues.

Implementation ideas: Explains one possible implementation of testware architecture.

Session 9: Pre- and Post-Processing

Introduction: The activities that are collectively called pre- and post-processing and the importance of automating them. Examples of pre- and post-processing.

Implementation: Two different approaches to implementing pre- and post-processing.

Session 10: Other Issues

Test selection: The order in which tests should be run and how to efficiently select them for execution.

Order of running: Considerations to take into account when executing a number of automated test cases.

Test status: The end result of a test should not be limited to a simple pass or fail.
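
As a sketch of what a richer test status might look like, the example below distinguishes four outcomes instead of two. The status names and the classification rules are assumptions made for illustration; the course may define a different set.

```python
from enum import Enum


class Status(Enum):
    """Hypothetical set of test outcomes beyond plain pass/fail."""

    PASSED = "passed"
    FAILED = "failed"
    EXPECTED_FAIL = "expected fail"  # output matches a known, already reported failure
    NOT_COMPLETED = "not completed"  # the test stopped before reaching its end


def classify(completed, matches_expected, matches_known_failure=False):
    """Map the raw facts about a test run onto a status."""
    if not completed:
        return Status.NOT_COMPLETED
    if matches_expected:
        return Status.PASSED
    if matches_known_failure:
        return Status.EXPECTED_FAIL
    return Status.FAILED


status = classify(completed=True, matches_expected=False, matches_known_failure=True)
```

Separating a known failure from a new one lets a report show at a glance which failures still need investigation.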

Monitoring progress: Metrics for reporting progress and how to obtain and present them.

Session 11: Test Suite Maintenance

Attributes of test maintenance: Common reasons for high maintenance costs and how to deal with them.

Strategy and tactics: The general approach to keeping on top of test suite maintenance costs.